exa-code

Want to skip to installing? See the instructions at the end of this post.

Fast, efficient web context for coding agents

Vibe coding should never have a bad vibe. But it often does, because in 2025 LLMs still lack reliable knowledge of the millions of libraries, APIs, and SDKs needed to write the best code.

Today, we're excited to release exa-code, the first web-scale context tool made for coding agents. Given a search query, exa-code returns the exact few hundred tokens from the web needed to ground coding agents with correct information.

Early evals show that exa-code is a state-of-the-art web tool for coding agents, owing to (1) Exa's search engine, which is built from the ground up for AIs, and (2) the efficiency of the returned context (hundreds of tokens, not thousands).

How it works

As just mentioned, the philosophy behind exa-code is that web context for coding agents must be extremely relevant and extremely dense. Behind the scenes, we heavily prioritize putting code examples into the context because they're efficient and effective. Here's how the full process works:

- Exa runs a hybrid search over 1B+ webpages to find the pages most relevant to the search query.

- Code examples are extracted from these webpages and reranked for relevance using an ensemble method that maximizes recall and quality.

- If the code examples are enough to answer the query, we return them as a concatenated string, typically a few hundred tokens long. Otherwise, we return full documentation pages (e.g., when the API page of some docs describes the API in English rather than in code).
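The extract, rerank, and return steps can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Exa's implementation: the pages are already retrieved, code is pulled from <code> tags, "relevance" is plain query-term overlap, and the ensemble is reciprocal rank fusion of a relevance ranking and a brevity ranking (a common ensemble method swapped in here; the post does not say which method exa-code uses). Page, min_overlap, and the whitespace token estimate are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Page:  # hypothetical stand-in for a retrieved webpage
    url: str
    text: str


CODE_RE = re.compile(r"<code>(.*?)</code>", re.DOTALL)


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9_]+", text.lower())


def extract_code_examples(page: Page) -> list[str]:
    """Pull <code>...</code> snippets out of a page's HTML."""
    return [m.strip() for m in CODE_RE.findall(page.text) if m.strip()]


def overlap_score(query: str, snippet: str) -> float:
    """Fraction of query terms that appear in the snippet (a toy relevance signal)."""
    q = set(tokenize(query))
    return len(q & set(tokenize(snippet))) / (len(q) or 1)


def reciprocal_rank_fusion(rankings: list[list[int]], k: int = 60) -> list[int]:
    """Merge several rankings of the same items into one ensemble ranking."""
    scores: dict[int, float] = {}
    for ranking in rankings:
        for rank, idx in enumerate(ranking, start=1):
            scores[idx] = scores.get(idx, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.__getitem__, reverse=True)


def build_context(query: str, pages: list[Page], token_budget: int = 300,
                  min_overlap: float = 0.25) -> str:
    snippets = [s for p in pages for s in extract_code_examples(p)]
    relevance = [overlap_score(query, s) for s in snippets]
    # Assumed fallback rule: no sufficiently relevant code -> return full pages.
    if not any(r >= min_overlap for r in relevance):
        return "\n\n".join(p.text for p in pages)

    order = range(len(snippets))
    by_relevance = sorted(order, key=lambda i: relevance[i], reverse=True)
    by_brevity = sorted(order, key=lambda i: len(snippets[i].split()))
    chosen, used = [], 0
    for i in reciprocal_rank_fusion([by_relevance, by_brevity]):
        if relevance[i] < min_overlap:
            continue  # drop weakly related snippets entirely
        cost = len(snippets[i].split())  # crude token estimate: whitespace words
        if used + cost <= token_budget:
            chosen.append(snippets[i])
            used += cost
    return "\n\n".join(chosen)
```

Ranking by brevity alongside relevance is one way to reflect the "dense context" goal: among equally relevant snippets, shorter ones win the token budget. A real system would use learned rerankers and a proper tokenizer.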

Examples

In this example, exa-code shows how to set up a reproducible Rust development environment using Nix. The returned context is under 500 tokens, and the tool is triggered by mentioning exa-code in the prompt.


Generally, an agent would find exa-code useful when:

- Updating an application's config.

- Calling API endpoints, such as Exa's or Slack's.

- Using SDKs like Boto3 (AWS) or the AI-SDK.

Evaluating exa-code

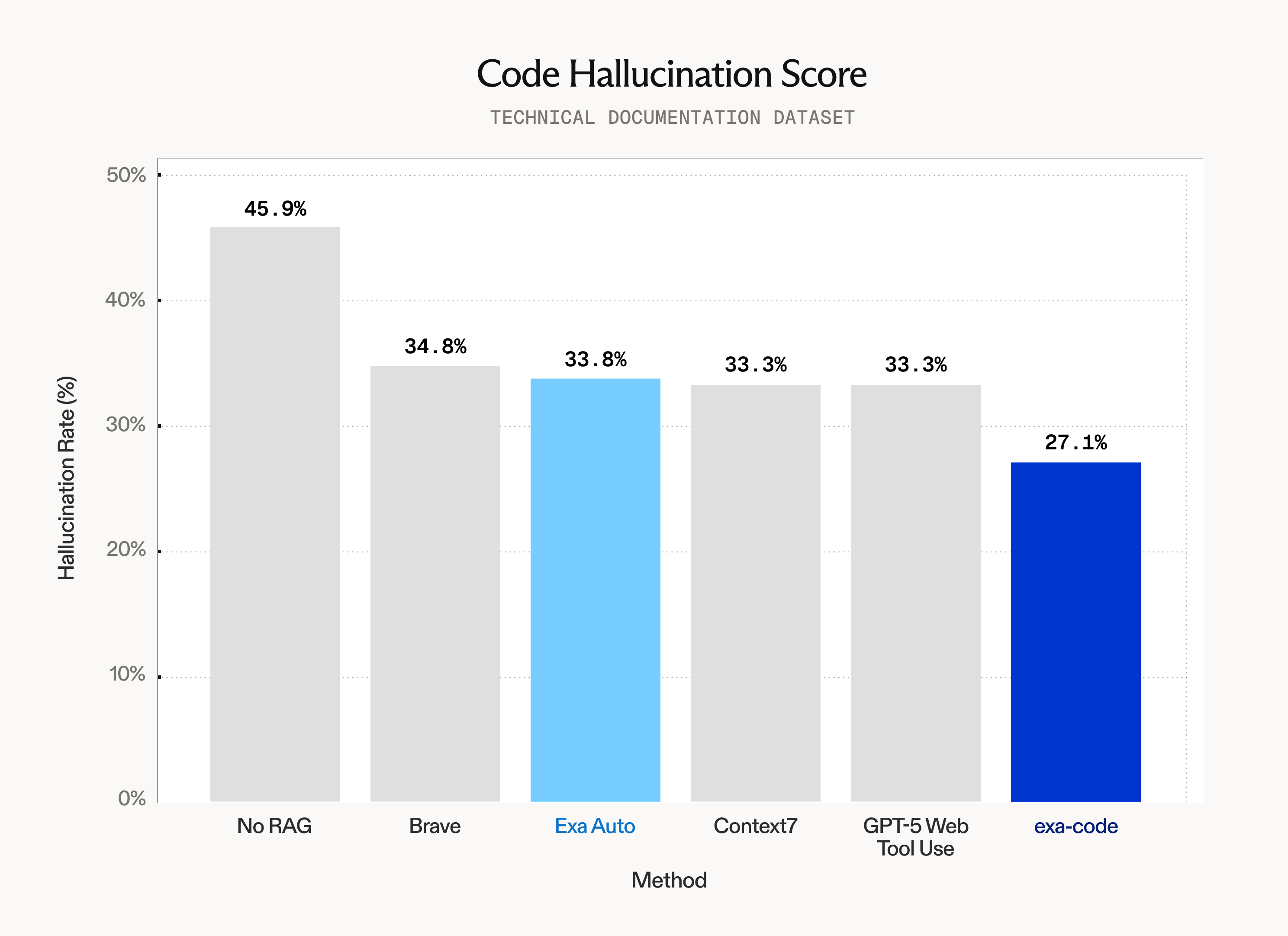

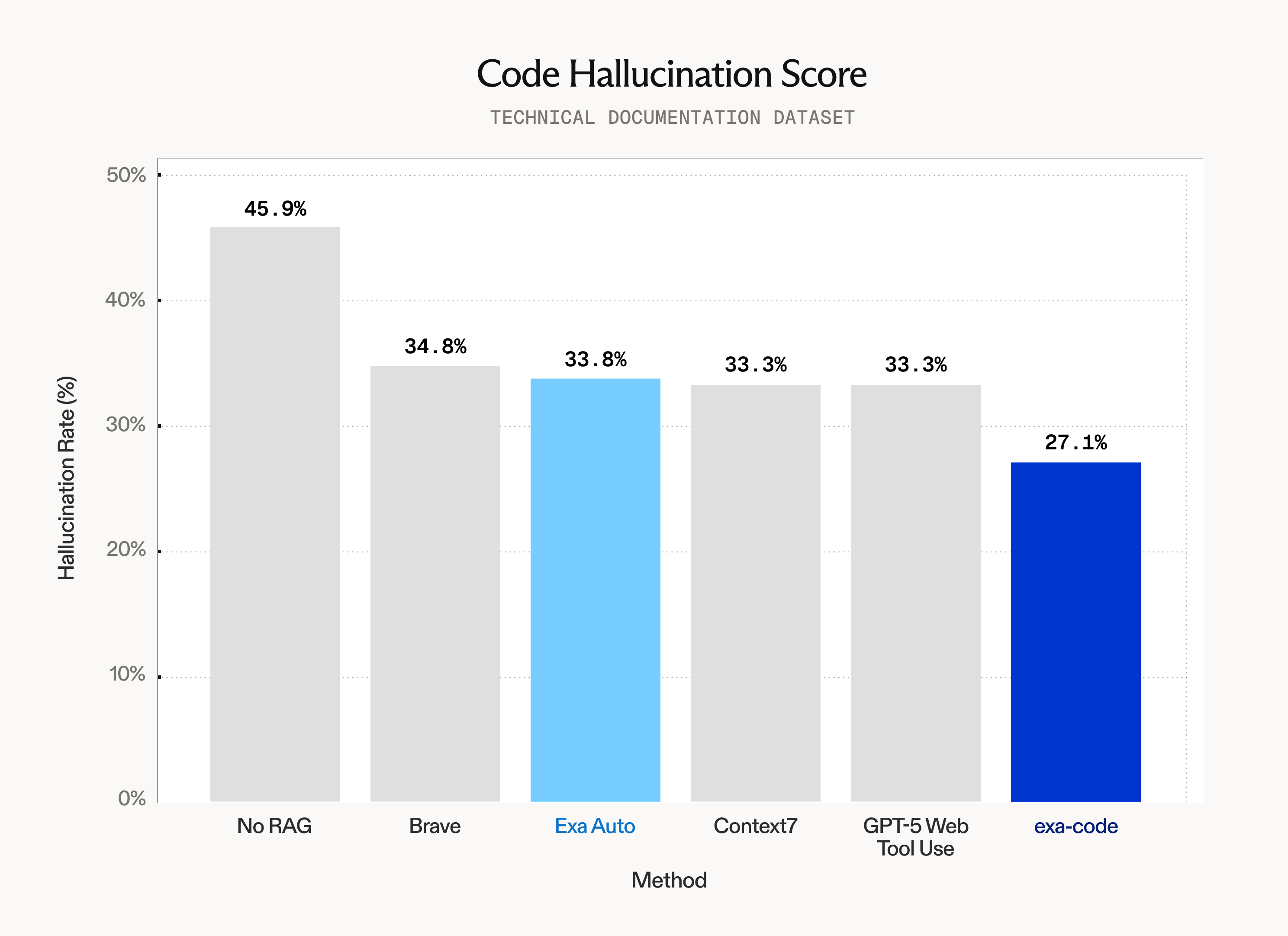

A better context tool will prevent more hallucinations. To benchmark exa-code, we created an evaluation focused on finding cases where LLMs hallucinate and measuring which context tools help the most. Across a diverse sample of libraries, APIs, and SDKs, we find that exa-code's context prevents the most hallucinations while requiring the fewest tokens.

We find hallucinations by classifying LLM responses to code-completion tasks. The tasks are generated from documentation and ask the model to write code that calls a library/SDK function or API endpoint. Tasks include a dependency version where relevant. After the test model generates code, an LLM classifier uses the source documentation to detect common hallucinations in the output. When given a context tool, the test model calls the tool before generating code.
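The evaluation loop can be sketched in Python. This is an illustration under stated assumptions, not Exa's harness: the post uses an LLM classifier, whereas this sketch swaps in a rule-based check that catches only one category, undocumented keyword parameters (the wrong-parameter case), by parsing the generated code with Python's ast module. Task, documented_kwargs, generate, and context_tool are hypothetical names; a real harness would call a model and a context tool.

```python
import ast
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Task:
    prompt: str                  # completion prompt generated from a docs page
    function: str                # function or decorator the answer should call
    documented_kwargs: set[str]  # keyword args the docs list (assumed known)


def parse_snippet(code: str) -> ast.Module:
    try:
        return ast.parse(code)
    except SyntaxError:
        # A bare decorator line needs something to decorate before it parses.
        return ast.parse(code + "\ndef _stub():\n    pass\n")


def is_wrong_parameter(task: Task, code: str) -> bool:
    """Rule-based stand-in for the LLM classifier: flag undocumented keyword args."""
    try:
        tree = parse_snippet(code)
    except SyntaxError:
        return True  # unparseable output counts as a failure in this sketch
    for node in ast.walk(tree):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        name = func.id if isinstance(func, ast.Name) else getattr(func, "attr", None)
        if name == task.function and any(
            kw.arg is not None and kw.arg not in task.documented_kwargs
            for kw in node.keywords
        ):
            return True
    return False


def run_eval(
    tasks: list[Task],
    generate: Callable[[str, Optional[str]], str],
    context_tool: Optional[Callable[[str], str]] = None,
) -> list[bool]:
    """Return one hallucination flag per task."""
    flags = []
    for task in tasks:
        # With a context tool, the model calls it before writing any code.
        context = context_tool(task.prompt) if context_tool else None
        flags.append(is_wrong_parameter(task, generate(task.prompt, context)))
    return flags
```

With a stub model that only gets the parameter right when it receives context, run_eval returns [True] without a context tool and [False] with one.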

Generated from https://docs.prefect.io/v3/how-to-guides/workflows/assets, the following task produced a wrong-parameter hallucination: the test model used asset_uris, a parameter that doesn't exist.

<testcase>
<task>
from prefect import flow
from prefect.assets import materialize

output_asset_uri = "s3://my-bucket/final-report.csv"

# Mark the function that generates the final report as one that creates or updates the specified asset URI to track workflow outputs
</task>
<answer>
@materialize(output_asset_uri)
</answer>
</testcase>

# test model output: hallucination
<answer>
@materialize(asset_uris=[output_asset_uri])
</answer>

A dependency's Hallucination Score is the percentage of its test cases classified as hallucinations. The evaluation charts report the average hallucination score for a sample of popular and less popular dependencies/endpoints under several context tools.
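The score itself is simple arithmetic. A minimal Python sketch, assuming each test case is already labeled as hallucination or not, and assuming "average" means the unweighted mean of per-dependency scores (the post does not say whether cases are pooled instead). The dependency names and labels below are made up for illustration and are not Exa's results.

```python
from statistics import mean


def hallucination_score(flags: list[bool]) -> float:
    """Percentage of one dependency's test cases classified as hallucinations."""
    if not flags:
        raise ValueError("a dependency needs at least one test case")
    return 100.0 * sum(flags) / len(flags)


def average_score(flags_by_dependency: dict[str, list[bool]]) -> float:
    """Unweighted mean of per-dependency scores: each dependency counts equally."""
    return mean(hallucination_score(f) for f in flags_by_dependency.values())


def pooled_score(flags_by_dependency: dict[str, list[bool]]) -> float:
    """Alternative: pool all cases, so dependencies with more tasks weigh more."""
    all_flags = [f for flags in flags_by_dependency.values() for f in flags]
    return hallucination_score(all_flags)


# Hypothetical labels: lib_a has 1 of 4 cases hallucinated, lib_b has 2 of 2.
data = {"lib_a": [True, False, False, False], "lib_b": [True, True]}
print(average_score(data))  # 62.5, the mean of 25.0 and 100.0
print(pooled_score(data))   # 50.0, i.e. 3 of 6 cases
```

The two aggregations diverge when dependencies have different numbers of tasks; averaging per dependency keeps a heavily sampled library from dominating the result.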

According to this evaluation, exa-code eliminates more code hallucinations than the other popular context sources listed. The main difference between exa-code and other sources is that exa-code is optimized for the recall of code examples. It uses a dedicated code-example index sourced from GitHub and Exa's web index, as well as code-specific retrieval models. For coding tasks involving libraries/APIs, this appears to be highly effective.

Your LLM should never hallucinate.

Imagine if LLMs never hallucinated: if they could both write new software and use dependencies with extremely high competence. Exa's mission is to remove the knowledge bottlenecks of LLMs, and exa-code aims to do this for coding agents.

The exa-code MCP server is freely available to the public on Smithery and in our docs.

Include "use exa-code" in your prompt to trigger the tool.